Initially posted on StorageNewsletter 20/5/2024

The 55th edition of The IT Press Tour, a new European edition, took place in Rome, Italy, earlier this month. It was an opportunity to meet and visit 6 companies all playing in IT infrastructure, cloud, networking, data management and storage plus AI. Executives, leaders, innovators and disruptors met and shared company strategy refreshes and product updates. These 6 companies are CTera, Fujifilm Recording Media, Know & Decide, Leil Storage, QStar Technologies and Quickwit.

CTera

In global file services for enterprises, CTera took advantage of the tour to provide a company and product update coupled with some key announcements.

Oded Nagel, CEO, shared the company’s 2023 picture with 2x new business and 30% growth in ARR, introduction of new key features, a new partner program, a very active partnership with Hitachi Vantara and the recognition of such market penetration with analysts’ reviews such as Coldago Research’s rankings in the Map 2023 for Cloud File Storage.

More and more adopted for various workloads, user groups and applications across a wide variety of industries and vertical segments, the product continues to evolve essentially around data services.

Among recent features and services, CTera delivered Migrate, Ransomware protection, Anti-Virus and WORM Vault plus the S3/NAS common content access and Analytics. Vault offers multiple modes to protect data at the cloud folder level: WORM, and WORM + Retention in 2 flavors, Enterprise and Compliance mode. Ransomware protection is a must for all entities and the company’s flavor leverages AI to deliver fast and reliable detection and remediation.

Saimon Mickelson, VP Alliances, spent some time introducing a new iteration around Vault with Vault 2 that brings 3 things: legal hold, object lock and chain of custody. Legal hold offers official capability for legal entities. Object lock operates at the file level and thus is more granular than the previous iteration. The last feature is a collaboration with Hitachi Vantara, a key partner for CTera, to provide the full report related to data migration. Vault 2 will be available in a few weeks.

The ransomware protection has also been extended with a data exfiltration prevention capability based on the honeypot concept. The demo illustrated the simplicity of the setup and its effective operation. The idea is to detect and block in real time any attempted data exfiltration, using dynamic decoy files that serve as honeypots to redirect attacks, help identify them and finally block them.
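To make the decoy idea concrete, here is a minimal sketch under our own assumptions. It is not CTera’s implementation: the event format, the `DECOYS` paths and the `process_events` function are hypothetical names chosen for illustration, and the decoy set is static whereas the product uses dynamic decoys.

```python
# Hypothetical illustration of decoy-file ("honeypot") detection.
# Nothing here reflects CTera's actual implementation or API.

DECOYS = {
    "/share/finance/.passwords_backup.xlsx",
    "/share/hr/.salaries_2024.csv",
}


def process_events(events, decoys=DECOYS):
    """Return (blocked_clients, served_requests).

    `events` is an iterable of (client, path) tuples in arrival order.
    A client is blocked at its first decoy access and all of its later
    requests are dropped.
    """
    blocked = set()
    served = []
    for client, path in events:
        if client in blocked:
            continue  # already blocked: ignore further requests
        if path in decoys:
            blocked.add(client)  # decoy touched: flag and block immediately
            continue
        served.append((client, path))
    return blocked, served


events = [
    ("alice", "/share/finance/report.docx"),
    ("mallory", "/share/hr/.salaries_2024.csv"),
    ("mallory", "/share/hr/contracts.pdf"),
]
blocked, served = process_events(events)
print(blocked)  # {'mallory'}
print(served)   # [('alice', '/share/finance/report.docx')]
```

A legitimate user has no reason to open a decoy, so a single access is treated as a strong signal; that is the design choice this sketch tries to show.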

Aron Brand, CTO, emphasized the direction the company has chosen: a deep integration of AI services to maximize the benefits of the CTera Data Platform.

The company continues to develop its enterprise file services platform at a very rapid pace, confirming its place as one of the most comprehensive solutions today. It has clear ambitions in the domain and wishes to become #1 in the cyber-storage area.

Fujifilm Recording Media

The tape manufacturing giant and industry reference chose to cover Kangaroo, its data archiving solution, offered as a mobile appliance. It comes from the fact that cold data must be stored on cold storage, which is not always the case, and by cold data we really mean archived data. It confirms that tape is probably the best media for such data when long retention, low TCO and energy savings are needed. The LTO roadmap, no longer aligned with the original plan, disappoints users and limits adoption. This is probably due to the form factor of tape drives and the backward compatibility requirement or choice. An LTO-9 cartridge offers today 18TB raw and 45TB compressed with a conservative 2.5:1 ratio. If a new model were introduced without these restrictions, the industry would probably receive a real capacity gain.

It’s worth mentioning that tape lost the capacity advantage it had in the past: recent HDDs reached 28TB and even SSDs pass the 60TB threshold. The failure to follow the past LTO roadmap has continued to widen the gap in that dimension.

Kangaroo was designed by Fujifilm to address low TCO, resiliency, scalability, efficiency and easy integration for cold data. The solution embeds internally developed storage software named Object Archive coupled with a server, disks, LTO tape drives and a BDT tape library or autoloader. The device exposes an S3 API but also NFS or SMB and finally offers an S3-to-tape model. Kangaroo can be connected to remote sites and cloud instances. The team has chosen the Open Tape Format based on POSIX TAR, which lets users potentially read tapes on various systems, now and in the future.

The minimum capacity is 1PB and for archiving that accumulates data, it appears that the solution targets the enterprise and SME categories. The company plans to introduce a Kangaroo lite model with capacity starting at 100TB.
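As a rough order of magnitude, the capacities quoted above translate into the following cartridge counts. This is a back-of-the-envelope sketch assuming native (uncompressed) LTO-9 capacity and decimal units; the `cartridges_needed` helper is our own and ignores spare or duplicate copies.

```python
import math

LTO9_RAW_TB = 18         # native capacity per cartridge, from the text
COMPRESSION_RATIO = 2.5  # compression ratio quoted in the text


def cartridges_needed(capacity_tb, per_cartridge_tb=LTO9_RAW_TB):
    """Number of cartridges needed to hold `capacity_tb` (decimal TB)."""
    return math.ceil(capacity_tb / per_cartridge_tb)


print(LTO9_RAW_TB * COMPRESSION_RATIO)  # 45.0 (TB compressed per cartridge)
print(cartridges_needed(1000))          # 56 cartridges for the 1PB entry point
print(cartridges_needed(100))           # 6 cartridges for a 100TB lite model
```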

Fujifilm Recording Media also extends this solution with its Archive Services or FAS. It is a physical site in Kleve, Germany, with some tape libraries, without any network connection to the outside world. To maintain good security segmentation, it’s impossible to ingest data digitally; data enters the vault only through tape imports. The site is ISO27001 compliant and tapes are not copied: they’re the original ones from users, but of course other copies can be made as well. For users, everything is controlled via a specific web portal to initiate actions. For data export and retrieval needs, a request can be made via the web portal, then an ESFTP server can be instantiated or tapes can be shipped.

Know and Decide

Founded in 2015 by Emmanuel Moreau, a French entrepreneur, Know and Decide designs and develops an IT asset management service for enterprises. He got the idea from his past experiences and connections with top CIOs from large European companies, who clearly faced a real challenge trying to comprehensively discover, track, control and manage the lifecycle of all IT resources and the logical services associated with them. And IT resources have to cover more than computers, as the IoT became a serious headache for such large enterprises.

The solution developed is composed of 3 modules: the discovery, the management and the reporting one.

The first module, the discovery one, collects dispersed information via an API but also via collectors. Today more than 80 are available and a file import function has been added to ease the ingestion of offline documents and information from financial or contractual files among others. This module also connects to ERPs and other similar reference catalogs to feed the CMDB.

For data management, the product leverages the information collected to force correlation, identify duplicate records and potential divergences, and point out anomalies. This is a key phase to converge towards better consistency.
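The correlation step can be pictured with a small sketch. This is our own simplified illustration, not Know and Decide’s engine: the record fields, the `correlate` function and the choice of the serial number as matching key are assumptions.

```python
from collections import defaultdict


def correlate(records, key="serial"):
    """Group records from several sources by a normalized key and report
    duplicates together with the fields on which the sources disagree."""
    groups = defaultdict(list)
    for rec in records:
        groups[rec[key].strip().upper()].append(rec)

    report = {}
    for normalized, recs in groups.items():
        if len(recs) < 2:
            continue  # seen by a single source: nothing to reconcile
        fields = set().union(*(r.keys() for r in recs)) - {key, "source"}
        diverging = sorted(
            f for f in fields if len({r.get(f) for r in recs}) > 1
        )
        report[normalized] = {
            "sources": [r["source"] for r in recs],
            "diverging": diverging,
        }
    return report


records = [
    {"source": "network_scan", "serial": "ab123", "owner": "finance", "location": "Paris"},
    {"source": "erp", "serial": "AB123 ", "owner": "finance", "location": "Lyon"},
    {"source": "contract_file", "serial": "ZX900", "owner": "hr", "location": "Brussels"},
]
report = correlate(records)
print(report)
# {'AB123': {'sources': ['network_scan', 'erp'], 'diverging': ['location']}}
```

Here the same asset is declared twice with two different locations, which is exactly the kind of anomaly a CMDB quality pass is expected to surface.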

On the reporting aspect, it provides a global view of all IT resources and associated information collected during the discovery phase.

The product can be deployed on-premises and in the cloud with various distributed collect servers coupled with a central database and reporting interfaces. The philosophy is agent-less: how could the opposite, an agent-based approach, discover things when you don’t know what to discover? In other words, if you need to deploy an agent, it means you have already identified the resource, so the agent is useless for discovery.

The other goal of the solution promoted by CIOs is to really align the reality of deployed resources with the information present in contracts and declared offline, online…

The team applied its approach to 5 use cases that seem to be a good fit for it: use case #1 is about a global vision of all IT assets, use case #2 touches the quality of the CMDB, use case #3 is related to the production plan, use case #4 covers the security plan and use case #5 details budget to streamline the cost model.

Currently the product is already deployed and adopted by large customers in France, Belgium and Luxembourg. Moreau is looking for new partners that can penetrate some countries as all enterprises have the same issues. Another need appears to be around vertical industries and use cases that should be able to leverage the approach as well.

On the pricing side, a 3-year subscription model is used based on annual costs of CIs and it varies from €1 to €10 per CI per year.

We expect to see some AI extension to the model, especially with natural language processing, to add a verbal request engine able to deliver live answers to questions during executive and board meetings.

Leil Storage

The Estonian company is a young player in the secondary storage space surfing on the ESG requirements and enterprise goals. The team wishes to address 2 key metrics: capacity per rack unit and electric power per terabyte leveraging SMR HDD, erasure coding (EC) techniques, power control and distributed file system.

Speaking about secondary storage, energy consumption is a key element to consider as the objective is not to keep the storage unit up and running for 10 years, for instance. If you can control when and how you reduce or even eliminate that power dimension, you converge to what a passive media can deliver, especially for archive or deep archive.

A key partner of Western Digital thanks to its past history at Diaway, the founding team selected SMR HDDs to gain capacity for the same footprint and started to implement power control for them. Above this, architects create volumes or groups of disks and protect data in these with erasure coding. This EC model is based on Reed-Solomon and deployed systematically with a classic 6+2 scheme for each volume.

Not all drives at a system level are up at the same time, only a few, let’s say one volume for small files and one active one called standard. Their power controls are managed separately and when the standard one reaches a certain threshold, the volume is shut down and replaced by a new active one, and so on. This power management is sophisticated with 4 different levels and promoted by Leil as ICE for Infinite Cold Engine. We can see this as an advanced evolution of what we saw 20 years ago with initiatives from Copan Systems, Nexsan, NEC, Fujitsu or even more recently with Disk Archive and its single drive power control approach.
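The rotation of the active volume can be sketched as follows. This is a toy model under our own assumptions, not Leil’s ICE logic: it only knows two power states instead of the 4 levels mentioned, and the `VolumePool` class and its threshold are hypothetical.

```python
class VolumePool:
    """Toy model: one active volume at a time, all others powered down."""

    def __init__(self, volume_ids, threshold_tb):
        self.idle = list(volume_ids)    # empty volumes, powered down
        self.sealed = []                # filled volumes, powered down
        self.threshold_tb = threshold_tb
        self.active = self.idle.pop(0)  # the only powered-on volume
        self.used_tb = 0

    def write(self, size_tb):
        if self.active is None:
            raise RuntimeError("no volume left to activate")
        self.used_tb += size_tb
        if self.used_tb >= self.threshold_tb:
            self._rotate()

    def _rotate(self):
        # Shut down the filled volume and power on the next idle one.
        self.sealed.append(self.active)
        self.active = self.idle.pop(0) if self.idle else None
        self.used_tb = 0


pool = VolumePool(["vol-1", "vol-2", "vol-3"], threshold_tb=90)
for _ in range(9):
    pool.write(10)
print(pool.sealed, pool.active)  # ['vol-1'] vol-2
```

Only one volume draws power for writes at any time, which is where the energy saving comes from in this simplified picture.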

Above volumes, the engineering team uses their internally developed distributed POSIX file system, named SaunaFS, inspired by the famous Google file system. They use the same philosophy more comparable with Colossus, with several metadata servers and dedicated data servers with client software for the client-metadata-data server operations and exchanges. Client software exists for Windows, Mac and Linux machines and NFS server based on Ganesha and S3 based on MinIO are available as well.

A file system is mapped to each erasure coded volume and a fine catalog keeps the file residence information. Each file is chunked and distributed to chunk servers with chunks of 64MB subdivided into 64KB blocks. This is fundamental especially when an access request is initiated touching a shutdown volume. SaunaFS offers snapshots based on a copy-on-write model, advanced data integrity control with CRC32 and data scrubbing.
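The chunk and block sizes give a simple layout arithmetic, sketched below. This is not SaunaFS code: we assume binary units (64MiB and 64KiB) and one CRC32 per block, and the `layout` and `block_checksums` helpers are our own.

```python
import math
import zlib

CHUNK_SIZE = 64 * 1024 * 1024  # 64MB chunk, from the text (binary units assumed)
BLOCK_SIZE = 64 * 1024         # 64KB block, from the text (binary units assumed)


def layout(file_size):
    """Return (number of chunks, number of blocks) for a file of `file_size` bytes."""
    return math.ceil(file_size / CHUNK_SIZE), math.ceil(file_size / BLOCK_SIZE)


def block_checksums(data):
    """One CRC32 per 64KB block (per-block granularity is an assumption)."""
    return [
        zlib.crc32(data[i:i + BLOCK_SIZE])
        for i in range(0, len(data), BLOCK_SIZE)
    ]


print(CHUNK_SIZE // BLOCK_SIZE)   # 1024 blocks per chunk
print(layout(200 * 1024 * 1024))  # (4, 3200) for a 200MB file

# A corrupted byte only invalidates the checksum of the block that holds it.
data = bytes(2 * BLOCK_SIZE)
damaged = bytearray(data)
damaged[BLOCK_SIZE + 5] = 1
before, after = block_checksums(data), block_checksums(bytes(damaged))
print(before[0] == after[0], before[1] == after[1])  # True False
```

Small blocks keep the cost of a scrub or repair local: only the damaged 64KB needs to be rebuilt rather than the whole chunk.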

SaunaFS is open source but the power control is not, and it is available only from Leil Storage when a storage array is purchased. A full rack of 8 nodes offers 15PB usable with EC 6+2 and 28TB SMR HDDs coupled with a flash NVMe front-end.

Leil illustrates what we have promoted for a few years with a 3-dimension approach for secondary storage to be efficient: data reduction with compression and de-dupe, data durability with erasure coding, and power and energy control.
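The rack figures can be cross-checked with a quick calculation. This is only an estimate under our own assumptions: decimal units, no spare drives, no file system overhead and no allowance for the NVMe tier; the helper names are hypothetical.

```python
import math

DATA_PARTS, PARITY_PARTS = 6, 2  # EC 6+2, from the text
USABLE_TB = 15_000               # 15PB usable per rack, from the text
DRIVE_TB = 28                    # 28TB SMR HDD, from the text


def usable_fraction(data_parts, parity_parts):
    """Share of raw capacity left for data with a k+m erasure code."""
    return data_parts / (data_parts + parity_parts)


def raw_capacity_tb(usable_tb, data_parts, parity_parts):
    """Raw capacity needed to expose `usable_tb` with a k+m erasure code."""
    return usable_tb * (data_parts + parity_parts) / data_parts


raw_tb = raw_capacity_tb(USABLE_TB, DATA_PARTS, PARITY_PARTS)
print(usable_fraction(DATA_PARTS, PARITY_PARTS))  # 0.75
print(raw_tb)                                     # 20000.0 TB, i.e. 20PB raw
print(math.ceil(raw_tb / DRIVE_TB))               # 715 drives, at least
```

With 6+2, a volume survives the loss of any 2 of its 8 parts while keeping 75% of the raw capacity usable, compared with about 33% for 3-way replication.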

QStar Technologies

The data archiving company picked the tour to officially announce Global ArchiveSpace, its scalable solution for large data volumes. The company has already demonstrated the product at SuperComputing 2023 and recently at the NAB show, both times at the Hammerspace booth, and even at ISC 2024.

The company is a reference in the market, having existed for 37 years with a proven track record and more than 19,000 installations. Even with that huge installed base, the team suffers a bit from a lack of visibility, even if specialists know them. The firm is profitable and operates at its own pace.

QStar developed a series of products with Archive Manager (AM), the heart of the solutions set, Network Migrator, Archive Replicator and Object Storage Manager.

AM is the archive engine controlling storage units and the lifecycle of data submitted to it. It operates as a gateway in front of tape libraries, or cloud, exposing NFS, SMB or S3 as access methods. Gateway also means that AM is a disk cache accumulating data before an intelligent move to the tape library, and the engineering team has designed a specific optimized disk file system for that need. It also comes in an S3-to-Tape flavor adopted by Hammerspace, to be added and controlled by GDE, but also by Cohesity, HYCU or Rubrik.

Beyond AM, the company’s archive approach is complemented by Archive Replicator to make remote copies on up to 4 storage units. There is also Network Migrator, working like a classic HSM in pull mode or via API or agent.

QStar also recognized that a new scalable approach is needed, especially for very large environments and data sets, and thus decided to build Global ArchiveSpace (GAS). It provides a cluster of nodes all working together to sustain the demanding archiving workload connected to large tape libraries with a significant number of tape drives. It scales from 3 to 64 nodes with embedded failover in case of failures and all nodes see the same single file namespace, able to deliver operation services on all files. GAS implements a reservation method to protect tape drive usage and avoid conflicts. Today the solution is limited to a single site and multi-node and will be extended to multi-site in the future to deliver a global data archiving approach if needed. Hammerspace could be potentially used in that case but QStar will have the opportunity to develop its own model. There is no Redundant Array of Independent Tapes, meaning that a file belongs to only one tape and thus there is no file content distribution across cartridges. But the system has been developed to distribute directories with their related files in parallel to independent tape drives to sustain high throughput. GAS is a strategic direction for the future of QStar.
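The placement rule described above (whole files on a single tape, directories spread across drives) can be pictured with a short sketch. It is our own illustration, not QStar’s scheduler: the round-robin policy, the `distribute` function and the drive names are assumptions.

```python
from itertools import cycle


def distribute(directories, drives):
    """Assign each directory, with all of its files, to one tape drive.

    `directories` maps a directory name to its list of files. Files are
    never split: each one lands entirely on the drive of its directory.
    """
    plan = {drive: [] for drive in drives}
    for (dirname, files), drive in zip(sorted(directories.items()), cycle(drives)):
        plan[drive].extend((dirname, name) for name in files)
    return plan


directories = {
    "projA": ["a1.mov", "a2.mov"],
    "projB": ["b1.mov"],
    "projC": ["c1.mov"],
}
plan = distribute(directories, ["drive0", "drive1"])
print(plan["drive0"])  # [('projA', 'a1.mov'), ('projA', 'a2.mov'), ('projC', 'c1.mov')]
print(plan["drive1"])  # [('projB', 'b1.mov')]
```

Throughput then scales with the number of drives writing in parallel, while any single file can still be restored from one cartridge.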

Quickwit

Founded in 2020 in San Francisco, CA, by 3 co-founders, Paul Masurel, François Massot and Adrien Guillo, Quickwit has so far raised $2.6 million for its seed round. The company designed and developed a highly scalable search engine that is distributed by design, targeting large scale log environments.

Collecting all these logs, which are important for IT operations and services but not for the business itself as no production data is concerned, makes us realize that these data volumes could be larger, by far, than the production data environment. But to sustain a quality of service, prevent errors and, even more, anticipate some cyber attacks, having such fast search and indexing capabilities becomes paramount. This is a key component of the modern data observability approach.

Quickwit, the product, is written in Rust and leverages Tantivy, a high performance full-text search engine library initiated by Paul Masurel and already used by some key players on the market. The philosophy is stateless, a fundamental characteristic when an architecture has to scale, and also schemaless.

Performance results are spectacular: at a crypto company with 1PB of logs per day indexed by Quickwit on 200 pods, each with 6 vCPUs and 8GB of RAM, the product delivers 13.4GB/s and 14,503,371 docs/s. This company moved from OpenSearch and divided the cost by 5 and the storage cost by 2. Other examples of adoption are Fly.io, ContentSquare, Rho, Kalvad, PostHog and OwlyScan. As of today more than 200 companies use the product.
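These figures can be put in perspective with a quick sanity check. The derived values below are our own arithmetic from the quoted numbers, assuming decimal units and an even spread of the load across pods; they are not vendor figures.

```python
THROUGHPUT_GB_S = 13.4   # from the text
DOCS_PER_S = 14_503_371  # from the text
PODS = 200               # from the text
VCPUS_PER_POD = 6        # from the text
SECONDS_PER_DAY = 86_400

pb_per_day = THROUGHPUT_GB_S * SECONDS_PER_DAY / 1_000_000  # GB -> PB
mb_s_per_pod = THROUGHPUT_GB_S * 1000 / PODS                # GB/s -> MB/s per pod
avg_doc_bytes = THROUGHPUT_GB_S * 1e9 / DOCS_PER_S          # average document size

print(round(pb_per_day, 2))  # 1.16 PB/day, consistent with "1PB of logs per day"
print(round(mb_s_per_pod))   # 67 MB/s per pod
print(round(avg_doc_bytes))  # 924 bytes per document on average
print(PODS * VCPUS_PER_POD)  # 1200 vCPUs in total
```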

Competition comes from several famous products such as Elastic, Splunk or Grafana/Loki, which all offer some interesting features but not all at the same time. To summarize, Quickwit provides fast search and fast analytics at low cost, as illustrated by the adoption of the solution by key users and partners. In other words, people who tried it have adopted it.

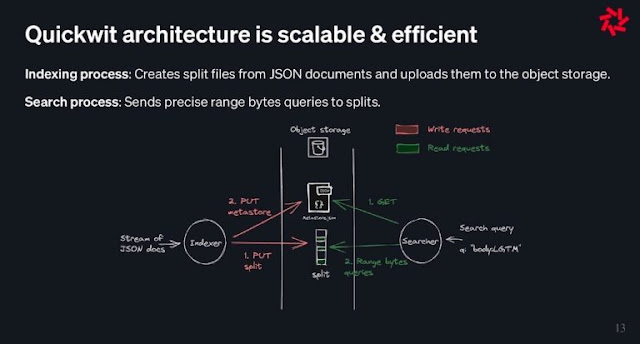

The product leverages object storage, we should say S3 storage, on-premises or in the cloud, with independent write/put and read/get requests for indexing and search respectively. At the heart resides the split file concept: a split is built from JSON documents and stored on S3 with 3 data structures, row-oriented for fast document access, inverted index for fast lookups and columnar for fast analytics. Having all three in one is one of the secret aspects of Quickwit.

In terms of business model, Quickwit is very opportunistic with direct sales, partnerships and community. The product is under a dual AGPL/Commercial license in 2024 and an open-core license has been added in 2025 for specific enterprise features.
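To see why the three structures complement each other, here is a toy in-memory sketch. It does not reproduce Quickwit’s or Tantivy’s actual split format; the `build_split` function and the sample log documents are our own.

```python
from collections import defaultdict


def build_split(docs):
    """Build three views of the same JSON-like documents."""
    rows = list(docs)             # row-oriented: doc id -> whole document
    inverted = defaultdict(set)   # inverted index: term -> doc ids
    columns = defaultdict(dict)   # columnar: field -> {doc id: value}
    for doc_id, doc in enumerate(rows):
        for field, value in doc.items():
            columns[field][doc_id] = value
            if isinstance(value, str):
                for term in value.lower().split():
                    inverted[term].add(doc_id)
    return rows, inverted, columns


docs = [
    {"level": "ERROR", "msg": "disk full on node7", "latency_ms": 120},
    {"level": "INFO", "msg": "backup done", "latency_ms": 35},
    {"level": "ERROR", "msg": "timeout on node7", "latency_ms": 900},
]
rows, inverted, columns = build_split(docs)

hits = sorted(inverted["node7"])                                # fast lookup
avg = sum(columns["latency_ms"][i] for i in hits) / len(hits)   # fast analytics
print(hits)           # [0, 2]
print(avg)            # 510.0
print(rows[hits[0]])  # fast document access: the full first matching log line
```

The inverted index finds the matching documents, the columnar view aggregates one field without reading whole documents, and the row store returns the full record when the user drills down.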
