Monday, June 30, 2025

Monday, June 09, 2025

Storadera opens a 3rd country

Thursday, June 05, 2025

HorizonH acquired Atempo

Tuesday, June 03, 2025

Quantum named a new CEO

Tuesday, May 27, 2025

The IT Press Tour #62 is back in California

Topics will cover IT infrastructure, cloud, networking, security, data management, big data, analytics and storage, and of course AI, as it is everywhere.

During this coming week, we'll meet 9 innovative companies and organizations:

- Cohesity, the new obvious global leader in Data Protection,

- DDN, the AI storage reference beyond its HPC storage leadership,

- Graid Technology, the pioneer in GPU-based RAID data protection,

- Hunch Tools, an emerging player developing AI tools,

- Lucidity, a fast-growing FinOps player,

- Phison Electronics, the global leader in SSDs and associated controllers,

- PuppyGraph, a young analytics vendor promoting graph,

- Tabsdata, a new approach for data integration,

- and Ultra Accelerator Link Consortium, the authority for specifying interconnect between AI Accelerators.

I invite you to follow us on Twitter with #ITPT and @ITPressTour, my Twitter handle @CDP_FST, and the journalists' respective handles.

Thursday, May 22, 2025

Recap of the 61st IT Press Tour in London

Initially posted on StorageNewsletter May 16, 2025

The 61st edition of The IT Press Tour was held at Battersea Power Station in London, UK, in early April. The event provided an excellent platform for the press group and participating organizations to engage in meaningful discussions on a wide range of topics, including IT infrastructure, cloud computing, networking, security, data management and storage, big data, analytics, and the pervasive role of AI across these domains. Six organizations participated in the tour, listed here in alphabetical order: AppsCode, Auwau, DAOS, FerretDB, Quesma and Storadera.

AppsCode

Founded in 2016, AppsCode is a relatively young yet ambitious player in the infrastructure control space built around Kubernetes. At the helm is CEO Tamal Saha, who spent some time at Google and is a strong advocate for Kubernetes and its potential to redefine infrastructure management.

AppsCode’s journey began in 2015, right at the onset of Kubernetes’ emergence. From the start, the founding team saw a clear need: to deliver a Kubernetes-native, production-ready data platform. They recognized that as enterprises quickly embraced Kubernetes—both on-premises and in the cloud—they were seeking agility in deploying, configuring, and managing their IT environments. A major goal was to build infrastructure independence, allowing businesses to operate beyond the constraints of specific clouds, regions, or data centers.

However, while Kubernetes offers a robust stateless model, it falls short for many real-world enterprise scenarios where managing stateful workloads—like databases—is essential. Managing these workloads across clusters, with scalability and resilience, presents unique challenges. AppsCode’s response is a platform that extends Kubernetes capabilities to include robust database management, backup and recovery, secrets and user access management, and cross-cluster operations.

To address these needs, the company developed four core products:

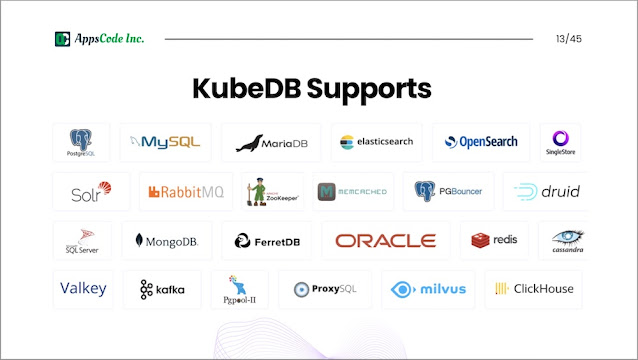

- KubeDB: A fully-featured database-as-a-service platform with an intuitive UI for provisioning and managing over 20 different database engines. It serves as an alternative to services like AWS RDS, but within users’ own infrastructure.

- KubeStash: A backup and recovery solution for Kubernetes workloads, encompassing databases and persistent volumes.

- Voyager: A traffic management solution built on HAProxy, focusing on load balancing and ingress routing.

- KubeVault: A tool for deploying and managing HashiCorp Vault on Kubernetes.

AppsCode experimented with various go-to-market strategies before settling on a simplified model: a single paid tier supported by a 30-day self-service trial. This approach has shown improved traction compared to earlier models. Additionally, the company tested a white-label strategy for KubeDB, which opened doors to promising OEM partnerships. Their primary audience now includes both end-users managing on-premises databases and potential OEM partners. Pricing is based on memory consumption, following a pay-as-you-go model that aligns with modern cloud-native economics.

Auwau

Auwau, a Denmark-based company founded in 2016, develops Cloutility—a backup management platform designed specifically for managed service providers (MSPs) and enterprise IT departments. CEO Thomas Bak led the discussion, highlighting the company’s focus and direction.

Despite being a very small team—just three people—Auwau has carved out a niche for itself with strong expertise in API integration for backup and data management solutions. Their core product, Cloutility, is a web-based, on-premises software platform that enables organizations to deliver Backup-as-a-Service (BaaS) capabilities in a secure, scalable, and efficient manner.

Cloutility is built as a secure, multi-tenant engine, providing centralized backup management through a modern dashboard. It supports integration with several major backup and storage platforms, including IBM Storage Protect, IBM Defender, IBM Cloud Object Storage, Rubrik, Cohesity, and PoINT Archival Gateway. Designed with flexibility in mind, Cloutility acts as a meta control plane over these data protection tools, allowing service providers and IT teams to manage multiple environments seamlessly.

The software operates on Windows and requires a Microsoft SQL Server instance. It supports APIs from select backup vendors and S3-compatible platforms. A defining feature of Cloutility is its hierarchical multi-tenancy model, which includes detailed user role management, enabling fine-grained access control across organizational layers.

Implementation is highly collaborative—Auwau works closely with integrators, independent software vendors (ISVs), and MSPs, typically completing integrations in about three weeks. The team emphasizes project-based engagement, tailoring the deployment to each specific use case. New integrations are already in development, indicating continued momentum and responsiveness to market needs.

While Auwau’s annual revenue remains under €1 million, this is seen as a strong achievement given its compact team and highly specialized focus. Looking ahead, OEM partnerships are clearly a strategic priority. CEO Thomas Bak is actively seeking new partners to expand Cloutility’s reach and accelerate growth in the backup and data management space.

DAOS

DAOS—short for Distributed Asynchronous Object Storage—is a high-performance storage architecture initiated by Intel in 2012 following its acquisition of Whamcloud. The project has steadily evolved over the years and Johann Lombardi, chair of the Technical Steering Committee, provided a comprehensive update on the project’s status and strategic direction.

In 2023, a major milestone was achieved with the creation of The DAOS Foundation, a new independent entity established to steward the ongoing development of DAOS as an open source project. This transition came after Intel wound down its Optane product line and began reducing its direct involvement in DAOS. The Foundation now oversees governance, funding, and community building, ensuring DAOS remains vendor-neutral and sustainably developed. Its current members include Argonne National Laboratory, Google, HPE, Intel, and the recently joined Vdura, each contributing in various capacities to the project’s roadmap and ecosystem.

DAOS has always been built with hyperscale performance in mind. To that end, the architecture incorporates several forward-thinking design decisions. It eliminates costly operations such as read-modify-write, opting instead for a versioning model and multi-version concurrency control (MVCC) to avoid locking. It also foregoes centralized metadata servers and global object tables to ensure maximum scalability. Each storage node runs its own instance of the DAOS engine and communicates with compute clients using user-space libraries (libdfs or libdaos). This design allows thousands of clients and storage servers to interact concurrently at scale.
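To make the versioning idea more concrete, here is a minimal sketch of multi-version concurrency control in Python. It is not DAOS code and does not use any DAOS API: the `VersionedStore` class, its epoch counter, and the key names are all hypothetical, chosen only to show how appending versions lets readers work from a stable snapshot without read-modify-write or locks.

```python
from bisect import bisect_right


class VersionedStore:
    """Toy multi-version store (hypothetical, not the DAOS implementation)."""

    def __init__(self):
        self._epoch = 0
        # key -> list of commit epochs and list of values, kept in epoch order
        self._epochs = {}
        self._values = {}

    def write(self, key, value):
        # A write never touches existing data: it appends a new version
        # stamped with the next epoch and returns that epoch.
        self._epoch += 1
        self._epochs.setdefault(key, []).append(self._epoch)
        self._values.setdefault(key, []).append(value)
        return self._epoch

    def read(self, key, epoch=None):
        # A reader picks an epoch and sees the newest version at or before it,
        # so later writes cannot disturb an in-flight read.
        if epoch is None:
            epoch = self._epoch
        epochs = self._epochs.get(key, [])
        idx = bisect_right(epochs, epoch)
        if idx == 0:
            return None
        return self._values[key][idx - 1]


store = VersionedStore()
e1 = store.write("block-7", b"old")
e2 = store.write("block-7", b"new")
snapshot = store.read("block-7", epoch=e1)  # reader pinned to the first epoch
latest = store.read("block-7")              # reader at the current epoch
```

A real system would also need to reclaim old versions and coordinate epochs across nodes, which this sketch leaves out.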

Interface support is broad, covering POSIX, NVMe-oF (block), MPI-IO, S3, and Hadoop, enabling DAOS to serve a wide range of high-performance and enterprise workloads. The system also supports typed datasets, enabling tailored configurations for use cases involving storage pools and multi-tenancy—critical features at hyperscale.

Data protection is ensured through both replication and erasure coding, providing resilience and data durability across massive infrastructures. This makes DAOS a preferred backend for some of the world’s fastest supercomputers. Notably, it powers the Aurora system at Argonne National Laboratory, ranked #1 on the IO500 benchmark list.

Looking ahead, the DAOS Foundation is preparing for a major reveal at the SC25 conference this November in St. Louis, Missouri, where it will launch DAOS 2.8, the next significant step in the platform’s evolution.

DAOS continues to represent one of the most advanced open-source object storage initiatives on the market—purpose-built for the era of exascale computing and beyond.

FerretDB

MongoDB has become a dominant force in the document database space, effectively holding a monopoly within the NoSQL landscape. Originally launched in 2007 as 10gen, MongoDB started as an open source project aiming to revolutionize database systems. A major shift occurred in 2014 when Dev Ittycheria became CEO, steering the company toward a commercial focus. The open source strategy was eventually abandoned, and MongoDB adopted a source-available licensing model that is no longer recognized as open source. The company went public in 2017 on the Nasdaq under the ticker MDB and has since grown into a $13 billion market cap company, reporting over $2 billion in annual revenue. However, this shift left many early users disillusioned: while MongoDB’s product was technically compelling, the change in licensing and increased vendor lock-in pushed some to seek alternatives.

One such alternative is FerretDB, a promising open-source project designed to offer MongoDB compatibility without the restrictive licensing. Founded by a team including Peter Farkas (CEO), who presented, and Alexey Palazhchenko (CTO), and guided by advisor Peter Zaitsev (former CEO of Percona), FerretDB emerged in response to MongoDB’s switch to the Server Side Public License (SSPL), which is viewed by many as commercially limiting.

FerretDB was launched in late 2021 and faced immediate legal pressure from MongoDB, which ultimately failed to halt the project. Built in Go, FerretDB provides a drop-in replacement for MongoDB but uses PostgreSQL as the underlying database engine. It is fully open source under the Apache 2.0 license and can be deployed on-premises or in the cloud.

FerretDB allows applications written using MongoDB’s MQL (MongoDB Query Language) to function without modification, giving developers a seamless transition path while avoiding lock-in. To date, over 4,000 known instances are running FerretDB, although the actual number is likely higher, as only those with telemetry enabled are counted. With a core team of just eight, the project has attracted over 200 contributors and earned 10,000+ stars on GitHub—a testament to the community’s interest.

In 2023, FerretDB initiated a collaboration with Microsoft to explore ways to counterbalance MongoDB’s dominance. Their work reinforces the broader industry need for an open standard in document databases to ensure portability, transparency, and freedom of choice.

FerretDB is more than a product—it’s a movement to restore openness and fairness in the document database world.

Quesma

We were exposed to Quesma, a young and dynamic software company based in Poland, during the Boston edition last October, through their involvement with Hydrolix, a company that relies on Quesma for a critical component in its data platform. That initial connection sparked curiosity, and this time we had the opportunity to dive deeper into what Quesma offers.

We met with Jacek Migdal, Quesma’s founder and CEO, who provided insights into the company’s journey and evolving product strategy. Founded in late 2023, Quesma is still in its early stages but has already secured $2.5 million in funding and built a small yet highly skilled team of eight. Their collective expertise lies in structured data, SQL, and database back-end technologies. Despite various industry attempts to replace SQL over the years, the language remains a staple due to its ubiquity and robustness. However, Quesma is tackling the back-end challenge—rethinking how databases serve modern needs in areas like logs, traces, metrics, and event data.

Quesma’s core product is a middleware layer that acts as a smart gateway for database queries. Instead of having applications communicate directly with the database, Quesma intercepts and processes queries through its query service layer. This approach offers significant flexibility, especially for integration and migration use cases, allowing backend changes without touching the application layer. This innovative architecture has already caught the attention of major players like Hydrolix and ClickHouse, both of whom have adopted Quesma’s technology.
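The gateway pattern described above can be illustrated with a short Python sketch. This is not Quesma's code or API: `QueryGateway`, the two backend functions, and the `"logs"` source name are all hypothetical, and real query translation is reduced to a simple function call. The only point is to show why the application does not change when the backend does.

```python
class QueryGateway:
    """Toy gateway (hypothetical): apps name a source, the gateway picks the backend."""

    def __init__(self):
        self._routes = {}

    def route(self, source, backend):
        # Point a logical source at a backend; this can be changed at any time.
        self._routes[source] = backend

    def query(self, source, request):
        backend = self._routes.get(source)
        if backend is None:
            raise KeyError(f"no backend configured for {source!r}")
        return backend(request)


def legacy_backend(request):
    # Stand-in for the database the application was originally written against.
    return f"legacy:{request}"


def new_backend(request):
    # Stand-in for the database being migrated to.
    return f"new:{request}"


def application_report(gateway):
    # Application code only knows the gateway and the logical source name.
    return gateway.query("logs", "count errors")


gateway = QueryGateway()
gateway.route("logs", legacy_backend)
before = application_report(gateway)

gateway.route("logs", new_backend)  # backend swapped, application untouched
after = application_report(gateway)
```

In this sketch the migration is a one-line routing change, while `application_report` stays exactly as written.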

One of the team’s upcoming innovations is SQL Pipe Syntax, inspired by a Google research paper. The concept aims to enhance SQL performance by optimizing command flow and aligning semantic order in queries, potentially delivering more efficient execution paths.

Quesma’s solution is available on GitHub, reinforcing its open development ethos. The pricing model is usage-based and depends on the stack. If users run on an open-source backend, Quesma is free to use. For proprietary stacks, pricing is set at $30 per terabyte per month, offering a clear and predictable cost structure.

In a world increasingly driven by data complexity, Quesma offers a clever abstraction that brings agility and performance to database interactions—without disrupting the existing application architecture.

Storadera

Storadera, an emerging cloud storage provider from Estonia, first presented during its inaugural European edition in Paris in September 2022—our 45th tour globally. At that time, Storadera was positioning itself as a fresh alternative to established cloud object storage players such as AWS S3, Wasabi, and Backblaze B2. Fast forward to the 61st edition of the tour, we had the chance to reconnect with CEO and founder Tommi Kannisto for an insightful update on the company’s growth and roadmap.

Since our first encounter, Storadera has made notable progress. It has expanded its infrastructure footprint by launching a new data center in Amsterdam, supplementing its original facility in Tallinn. The company is actively seeking strategic partners to support the deployment of additional data centers and is open to raising capital to fuel its growth ambitions.

At its core, Storadera offers a cloud object storage service built around an S3-compatible API, optimized for data protection workloads such as backup and archiving. The system architecture is focused on performance—especially write-heavy workloads—with a software stack written in Go. Unlike many competitors, Storadera doesn’t rely on front-end gateways or proxies. Instead, it connects servers directly to the internet and uses locally attached JBODs—currently Western Digital Data102 systems with conventional magnetic recording (CMR) hard drives.

This approach introduces a challenge: small file performance. Since many enterprise backup solutions (e.g., Veeam) generate numerous small files, HDDs, which typically deliver 150–200 IOPS, can become a bottleneck. Storadera’s response to this lies in one of its core innovations: Variable Block Size Technology. Inspired by some innovative developments on the market, this software-defined approach temporarily caches data in memory, batching writes into larger, sequential chunks that maximize HDD throughput, making HDDs behave more like SSDs in terms of small file performance.
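As a rough illustration of write batching in general, and not of Storadera's actual implementation, the following Python sketch buffers small objects in memory and emits them as one larger sequential chunk once a threshold is reached. The `BatchingWriter` class, the `flush_bytes` threshold, and the in-memory `chunks` list standing in for the disk are all assumptions made for the example.

```python
class BatchingWriter:
    """Toy write batcher (hypothetical): many small puts, few large disk writes."""

    def __init__(self, flush_bytes):
        self.flush_bytes = flush_bytes
        self._pending = []        # (name, data) pairs waiting in memory
        self._pending_bytes = 0
        self.chunks = []          # stands in for the disk: one entry per sequential write
        self.index = {}           # name -> (chunk number, offset, length)

    def put(self, name, data):
        self._pending.append((name, data))
        self._pending_bytes += len(data)
        if self._pending_bytes >= self.flush_bytes:
            self.flush()

    def flush(self):
        if not self._pending:
            return
        chunk_id = len(self.chunks)
        offset = 0
        for name, data in self._pending:
            # Remember where each small object lives inside the big chunk.
            self.index[name] = (chunk_id, offset, len(data))
            offset += len(data)
        self.chunks.append(b"".join(data for _, data in self._pending))
        self._pending = []
        self._pending_bytes = 0

    def get(self, name):
        chunk_id, offset, length = self.index[name]
        return self.chunks[chunk_id][offset:offset + length]


writer = BatchingWriter(flush_bytes=1024)
for i in range(100):
    writer.put(f"obj-{i}", bytes([i]) * 64)
writer.flush()  # push out whatever is still buffered
```

Here 100 small objects of 64 bytes each end up as 7 sequential writes instead of 100 random ones, which is the kind of reduction that matters when a drive can only serve a few hundred operations per second. A production design would also have to protect buffered data against power loss, which the sketch ignores.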

Resiliency is handled via local erasure coding (Reed-Solomon) with optional geo-replication. Storadera supports configurations like 4+2 or 6+2, meaning data is split and distributed across a minimum of three servers, ensuring durability even if two drives fail. This design not only enhances fault tolerance but also helps improve throughput when managing fragmented or small-sized data.
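The trade-off between the 4+2 and 6+2 layouts can be worked out with simple arithmetic. The helper below is only a sketch: `ec_profile` is a hypothetical function name, and it assumes the usual Reed-Solomon property that any `data_shards` out of the total shards are enough to rebuild the data.

```python
def ec_profile(data_shards, parity_shards):
    """Return basic figures for a data+parity erasure coding layout."""
    total = data_shards + parity_shards
    return {
        # Any `parity_shards` shards can be lost without losing data.
        "tolerated_losses": parity_shards,
        # Raw capacity consumed per unit of usable data.
        "raw_per_usable": total / data_shards,
        # Share of raw capacity that holds user data.
        "usable_fraction": data_shards / total,
    }


four_two = ec_profile(4, 2)
six_two = ec_profile(6, 2)
```

Both layouts survive the loss of two shards, but 4+2 consumes 1.5 units of raw capacity per unit stored, while 6+2 lowers that to about 1.33 at the cost of spreading each object over more drives.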

Beyond the core architecture, Storadera delivers enterprise-grade features such as S3 v4 API compatibility, object lock for compliance, built-in data integrity checks, encryption at rest and in transit, and ongoing plans to introduce AI-driven storage lifecycle management for smarter reads and deletes. The team is also exploring host-managed SMR HDDs to boost storage density and efficiency, aiming for long-term data retention with lower power usage and improved margins.

A key value proposition is Storadera’s simple and transparent pricing: €6 per terabyte per month—with no charges for ingress or egress traffic, making cost forecasting straightforward. Looking ahead, the company plans to expand further in Europe with a German facility in 2025, while considering entry into the UK, U.S., Canada, and APAC markets.
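Because the price is flat and traffic is free, a forecast reduces to multiplying stored capacity by the rate. The sketch below assumes the €6 per terabyte per month figure quoted above and a linear growth pattern; the `forecast_cost` function and the sample volumes are illustrative only, not figures from Storadera.

```python
PRICE_EUR_PER_TB_MONTH = 6  # rate quoted in the text; ingress and egress are free


def forecast_cost(start_tb, growth_tb_per_month, months):
    """Total cost in euros over `months`, with capacity growing linearly."""
    total = 0
    for month in range(months):
        stored_tb = start_tb + growth_tb_per_month * month
        total += stored_tb * PRICE_EUR_PER_TB_MONTH
    return total


# Hypothetical workload: 100 TB today, growing by 10 TB every month.
first_month = forecast_cost(100, 10, 1)
first_year = forecast_cost(100, 10, 12)
```

With no traffic charges to estimate, the only input that needs forecasting is the stored volume itself.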

Storadera continues to demonstrate that thoughtful software engineering combined with smart hardware choices can create a compelling, cost-effective alternative in the cloud storage space.

Tuesday, April 15, 2025

Kubernetes control from AppsCode

AppsCode, a young player in infrastructure control founded in 2016, participated recently in The IT Press Tour, organized in London. It was the perfect time, during KubeCon, to get a company and product update. Tamal Saha, its CEO, is a true believer in Kubernetes.

Wednesday, April 09, 2025

Auwau develops a universal backup control center

Monday, April 07, 2025

Storadera as a European alternative to AWS and Wasabi

Thursday, March 27, 2025

The IT Press Tour #61 will take place at the famous Battersea Power Station

- AppsCode, a young Kubernetes IT operations player,

- Auwau, a recent cloud backup operations management vendor,

- DAOS, a reference in high-performance storage infrastructure,

- FerretDB, an emerging player developing a MongoDB-compatible DocumentDB engine,

- Quesma, a specialist in portable SQL across applications,

- and Storadera, the alternative to AWS S3 and Wasabi.